This excerpt is part 4 - Cybernetic Cinema and Computer Films - of Youngblood's seminal book "Expanded Cinema" (1970). The whole book can be downloaded from the Vasulkas' archive: http://www.vasulka.org/Kitchen/PDF_ExpandedCinema/ExpandedCinema.html

THE HUMAN BIO-COMPUTER AND HIS ELECTRONIC BRAINCHILD

The verb "to compute" in general usage means to calculate. A computer, then, is any system capable of accepting data, applying prescribed processes to them, and supplying results of these processes. The first computer, used thousands of years ago, was the abacus.

There are two types of computer systems: those that measure and those that count. A measuring machine is called an analogue computer because it establishes analogous connections between the measured quantities and the numerical quantities supposed to represent them. These measured quantities may be physical distances, volumes, or amounts of energy. Thermostats, rheostats, speedometers, and slide rules are examples of simple analogue computers.
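The distinction between measuring and counting can be made concrete with the slide rule mentioned above. A slide rule multiplies by laying two lengths proportional to logarithms end to end and reading the total length back off the scale, so its answer is only as precise as the reading. The sketch below is a minimal illustration under assumed values: the 250 mm scale length (`SCALE_LENGTH_MM`) and the 0.1 mm reading precision (`READ_PRECISION_MM`) are hypothetical, chosen only to mimic reading a physical scale by eye.

```python
import math

# Assumed physical parameters of a hypothetical slide rule (not from the text).
SCALE_LENGTH_MM = 250.0   # length of one decade (a factor of 10) on the scale
READ_PRECISION_MM = 0.1   # how finely a person can read a position on the scale

def length_for(value: float) -> float:
    """Analogue step: represent a number as a physical distance proportional to its logarithm."""
    return math.log10(value) * SCALE_LENGTH_MM

def slide_rule_multiply(a: float, b: float) -> float:
    """Multiply by laying two lengths end to end, then reading the combined length off the scale."""
    total_mm = length_for(a) + length_for(b)
    # Reading a physical scale is only as precise as the eye, so round to the assumed precision.
    total_mm = round(total_mm / READ_PRECISION_MM) * READ_PRECISION_MM
    return 10 ** (total_mm / SCALE_LENGTH_MM)

def digital_multiply(a: int, b: int) -> int:
    """A counting machine operates on the digits themselves, so the product is exact."""
    return a * b

print(slide_rule_multiply(23, 47))  # close to 1081, but limited by the reading precision
print(digital_multiply(23, 47))     # 1081
```

The analogue result lands within a fraction of a percent of the true product, while the counting machine is exact. This is the practical difference between an "analogous connection" and a direct operation on digits.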

A counting machine is called a digital computer because it consists entirely of two-way switches that perform direct, not analogous, functions. These switches operate with quantities expressed directly as digits or discrete units of a numerical system known as the binary system.7 This system has 2 as its base. (The base of the decimal system is 10, the base of the octal system is 8, the base of the hexadecimal system is 16, and so on.) The binary code used in digital computers is expressed in terms of one and zero (1-0), representing on or off, yes or no. In electronic terms its equivalent is voltage or no voltage. Voltages are relayed through a sequence of binary switches in which the opening of a later switch depends on the action of precise combinations of earlier switches leading to it.
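The two ideas in this paragraph, positional number bases and chains of two-way switches where each stage depends on earlier ones, can be sketched in a few lines. The functions below (`to_base`, `full_adder`, `add_binary`) are illustrative names of our own, not any historical machine's circuitry. The adder uses only on/off logic, and each bit position waits on the carry produced by the position before it.

```python
def to_base(n: int, base: int) -> str:
    """Express a non-negative integer in the given base (2 to 16) as a digit string."""
    digits = "0123456789ABCDEF"
    if n == 0:
        return "0"
    out = []
    while n > 0:
        n, remainder = divmod(n, base)
        out.append(digits[remainder])
    return "".join(reversed(out))

def full_adder(a: int, b: int, carry_in: int) -> tuple:
    """One-bit adder built only from two-way (on/off) logic: returns (sum_bit, carry_out)."""
    sum_bit = a ^ b ^ carry_in
    carry_out = (a & b) | (carry_in & (a ^ b))
    return sum_bit, carry_out

def add_binary(x: str, y: str) -> str:
    """Add two binary strings; each stage's output depends on the carry from the stage before it."""
    width = max(len(x), len(y))
    x, y = x.zfill(width), y.zfill(width)
    carry = 0
    bits = []
    for a, b in zip(reversed(x), reversed(y)):
        s, carry = full_adder(int(a), int(b), carry)
        bits.append(str(s))
    if carry:
        bits.append("1")
    return "".join(reversed(bits))

for base in (2, 8, 10, 16):
    print(base, to_base(1970, base))
print(add_binary("1011", "0110"))  # 11 + 6 = 17, printed as 10001
```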

The term binary digit usually is abbreviated as bit, which is used also as a unit of measurement of information. A computer is said to have a "million-bit capacity," or a laser hologram is described as requiring 10⁹ bits of information to create a three-dimensional image.

The largest high-velocity digital computers have a storage capacity from four thousand to four million bits consisting of twelve to forty-eight digits each. The computer adds together two forty-eight-digit numbers simultaneously, whereas a man must add each pair of digits successively. The units in which this information is stored are called ferrite memory cores. As the basic component of the electronic brain, the ferrite memory core is equivalent to the neuron, the fundamental element of the human brain, which is also a digital computer. The point at which a nerve impulse passes from one neuron to another is called a synapse, which measures about 0.5 micron in diameter. Through microelectronic techniques of Discretionary Wiring and Large Scale Integration (LSI), circuit elements of five microns are now possible. That is, the size of the computer memory core is approaching the size of the neuron. A complete computer function with an eight-hundred-bit memory has been constructed occupying only nineteen square millimeters.8

The time required to insert or retrieve one bit of information is known as memory cycle time. Whereas neurons take approximately ten milliseconds (10⁻² second) to transmit information from one to another, a binary element of a ferrite memory core can be reset in one hundred nanoseconds, or one hundred billionths of a second (10⁻⁷ second). This means that computers are about one hundred thousand times faster than the human brain. This is largely offset, however, by the fact that computer processing is serial whereas the brain performs parallel processing. Although the brain conducts millions of operations simultaneously, most digital computers conduct only one computation at any one instant in time.9 Brain elements are much more richly connected than the elements in a computer. Whereas an element in a computer rarely receives simultaneous inputs from two other units, a brain cell may be simultaneously influenced by several hundred other nerve cells.10 Moreover, while the brain must sort out and select information from the nonfocused total field of the outside world, data input to a computer is carefully pre-processed.
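The "one hundred thousand times faster" figure is simply the ratio of the two switching times, and the offset from parallelism follows from multiplying by the number of units working at once. The sketch below uses the timings from the paragraph. The one million parallel neurons is a hypothetical round number standing in for the text's "millions of operations simultaneously", so treat the comparison as illustrative only.

```python
# Figures taken from the paragraph above.
NEURON_TRANSMIT_S = 1e-2   # about ten milliseconds between neurons
CORE_RESET_S = 1e-7        # one hundred nanoseconds to reset a ferrite core element

speed_ratio = NEURON_TRANSMIT_S / CORE_RESET_S
print(f"a core element is about {speed_ratio:,.0f} times faster than a neuron")

def operations_per_second(step_time_s: float, parallel_units: int) -> float:
    """Throughput = steps per second for one unit x number of units working at once."""
    return parallel_units / step_time_s

# Hypothetical comparison: one serial processor versus a million neurons working in parallel.
serial_machine = operations_per_second(CORE_RESET_S, 1)
parallel_brain = operations_per_second(NEURON_TRANSMIT_S, 1_000_000)
print(serial_machine, parallel_brain)
```

Under these assumptions the slower but massively parallel system comes out ahead, which is the sense in which the raw speed advantage is "largely offset."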

HARDWARE AND SOFTWARE

It is often said that computers are "extraordinarily fast and extraordinarily accurate, but they also are exceedingly stupid and therefore have to be told everything." This process of telling the computer everything is called computer programming. The hardware of the human bio-computer is the physical cerebral cortex, its neurons and synapses. The software of our brain is its logic or intelligence, that which animates the physical equipment. That is to say, hardware is technology whereas software is information. The software of the computer is the stored set of instructions that controls the manipulation of binary numbers. It usually is stored in the form of punched cards or tapes, or on magnetic tape. The process by which information is passed from the human to the machine is called computer language. Two of the most common computer languages are Algol, derived from "Algorithmic Language," and Fortran, derived from "Formula Translation."

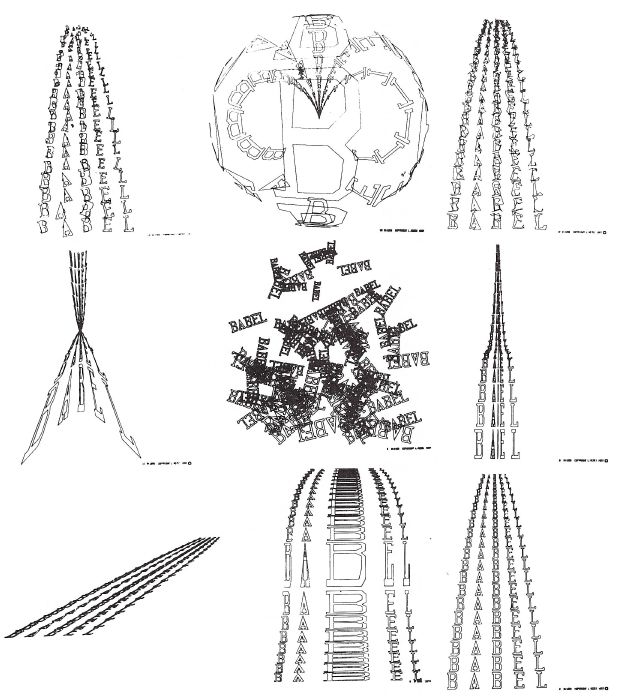

The basis of any program is an algorithm: a prescribed set of rules that define the parameters, or discrete characteristics, of the solution to a given problem. The algorithm is the solution, as opposed to the heuristics or methods of finding a solution. In the case of computer-generated graphic images, the problem is how to create a desired image or succession of images. The solution usually is in the form of polar equations with parametric controls for straight lines, curves, and dot patterns.
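As an illustration of "polar equations with parametric controls," the sketch below samples a rose curve, r = a·cos(kθ), and converts it into integer xy coordinates for a plotter. The choice of the rose curve, the 1024-point square grid, and the function names are our own assumptions and not a documented historical program. Changing the controls `a`, `k`, `steps`, or `revolutions` makes the same rule yield a different figure, and stepping a control from frame to frame yields a succession of images.

```python
import math

def polar_curve(a: float, k: float, steps: int = 360, revolutions: float = 1.0):
    """Sample the rose curve r = a*cos(k*theta) and return (x, y) points.

    a, k, steps and revolutions are the parametric controls.
    """
    points = []
    for i in range(steps + 1):
        theta = 2 * math.pi * revolutions * i / steps
        r = a * math.cos(k * theta)
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points

def to_plot_coordinates(points, resolution: int = 1024):
    """Map points in [-1, 1] x [-1, 1] onto integer xy coordinates of a hypothetical square plotting grid."""
    half = (resolution - 1) / 2
    return [(round(half + x * half), round(half + y * half)) for x, y in points]

frame = to_plot_coordinates(polar_curve(a=1.0, k=3))
# A succession of images: vary one control from frame to frame.
film = [to_plot_coordinates(polar_curve(1.0, k)) for k in (2.0, 2.5, 3.0, 3.5)]
```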

Computers can be programmed to simulate "conceptual cameras" and the effects of other conceptual filmmaking procedures. Under a grant from the National Science Foundation in 1968, electrical engineers at the University of Pennsylvania produced a forty-minute instructional computer film using a program that described a "conceptual camera," its focal plane and lens angle, panning and zoom actions, fade-outs, double-exposures, etc. A program of "scenario description language" was written which, in effect, stored fifty years of moviemaking techniques and concepts in an IBM 360/65 computer.11
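A "conceptual camera" of this kind reduces to a projection rule plus a few parameters that the program varies over time. The sketch below is a hypothetical pinhole camera, not the University of Pennsylvania program, whose details the text does not give. Panning rotates the scene about the vertical axis, and zooming narrows the lens angle, which lengthens the effective focal length.

```python
import math
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class ConceptualCamera:
    """Hypothetical pinhole camera: position on the ground plane, pan angle, and lens angle."""
    x: float = 0.0
    z: float = 0.0
    pan: float = 0.0              # radians; 0 looks straight down the +z axis
    lens_angle_deg: float = 50.0  # field of view; narrowing it zooms in

    def project(self, px: float, py: float, pz: float):
        """Project a 3D point onto the focal plane; returns (u, v), or None if behind the camera."""
        dx, dz = px - self.x, pz - self.z
        # Undo the pan: rotate the scene about the vertical axis by -pan.
        cx = dx * math.cos(self.pan) - dz * math.sin(self.pan)
        cz = dx * math.sin(self.pan) + dz * math.cos(self.pan)
        if cz <= 0:
            return None
        focal = 1.0 / math.tan(math.radians(self.lens_angle_deg) / 2)
        return focal * cx / cz, focal * py / cz

def zoom(camera, point, start_deg, end_deg, frames):
    """A zoom: interpolate the lens angle over `frames` frames and project the point in each one."""
    shots = []
    for i in range(frames):
        t = i / (frames - 1) if frames > 1 else 0.0
        lens = start_deg + (end_deg - start_deg) * t
        shots.append(replace(camera, lens_angle_deg=lens).project(*point))
    return shots

camera = ConceptualCamera()
shots = zoom(camera, (1.0, 0.0, 5.0), start_deg=60.0, end_deg=20.0, frames=5)
```

A pan works the same way: step `pan` through a range of angles with `replace(camera, pan=...)` and project the scene once per frame.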

In the last decade seventy percent of all computer business was in the area of central processing hardware, that is, digital computers themselves. Authorities estimate that the trend will be completely reversed in the coming decade, with seventy percent of profits being made in software and the necessary input-output terminals. At present, software equals hardware in annual sales of approximately $6.5 billion, and is expected to double by 1975.12 Today machines read printed forms and may even decipher handwriting. Machines "speak" answers to questions and are voice-actuated.

Computers play chess at tournament level. In fact, one of the first instances of a computer asking itself an original question occurred in the case of a machine programmed to play checkers and backgammon simultaneously. A situation developed in which it had to make both moves in one reset cycle and thus had to choose between the two, asking itself: "Which is more important, checkers or backgammon?" It selected backgammon on the grounds that more affluent persons play that game, and since the global trend is toward more wealth per each world person, backgammon must take priority.13

Machine tools in modern factories are controlled by other machines, which themselves have to be sequenced by higher-order machines. Computer models can now be built that exhibit many of the characteristics of human personality, including love, fear, and anger. They can hold beliefs, develop attitudes, and interact with other machines and human personalities. Machines are being developed that can manipulate objects and move around autonomously in a laboratory environment. They explore and learn, plan strategies, and can carry out tasks that are incompletely specified.14

So-called learning machines such as the analogue UCLM II from England, and the digital Minos II developed at Stanford University, gradually are phasing out the prototype digital computer. A learning machine has been constructed at the National Physical Laboratory that learns to recognize and to associate differently shaped shadows which the same object casts in different positions.15 These new electronic brains are approaching speeds approximately one million times faster than the fastest digital computers. It is estimated that the next few generations of learning machines will be able to perform in five minutes what would take a digital computer ten years. The significance of this becomes more apparent when we realize that a digital computer can process in twenty minutes information equivalent to a human lifetime of seventy years at peak performance.16

N. S. Sutherland: "There is a real possibility that we may one day be able to design a machine that is more intelligent than ourselves. There are all sorts of biological limitations on our own intellectual capacity ranging from the limited number of computing elements we have available in our craniums to the limited span of human life and the slow rate at which incoming data can be accepted. There is no reason to suppose that such stringent limitations will apply to computers of the future... it will be much easier for computers to bootstrap themselves on the experience of previous computers than it is for man to benefit from the knowledge acquired by his predecessors. Moreover, if we can design a machine more intelligent than ourselves, then a fortiori that machine will be able to design one more intelligent than itself."17

The number of computers in the world doubles each year, while computer capabilities increase by a factor of ten every two or three years. Herman Kahn: "If these factors were to continue until the end of the century, all current concepts about computer limitations will have to be reconsidered. Even if the trend continues for only the next decade or so, the improvements over current computers would be factors of thousands to millions... By the year 2000 computers are likely to match, simulate or surpass some of man's most 'human-like' intellectual abilities, including perhaps some of his aesthetic and creative capacities, in addition to having new kinds of capabilities that human beings do not have... If it turns out that they cannot duplicate or exceed certain characteristically human capabilities, that will be one of the most important discoveries of the twentieth century."18

Dr. Marvin Minsky of M.I.T. has predicted: "As the machine improves... we shall begin to see all the phenomena associated with the terms 'consciousness,' 'intuition' and 'intelligence.' It is hard to say how close we are to this threshold, but once it is crossed the world will not be the same... it is unreasonable to think that machines could become nearly as intelligent as we are and then stop, or to suppose that we will always be able to compete with them in wit and wisdom. Whether or not we could retain some sort of control of the machines, assuming that we would want to, the nature of our activities and aspirations would be changed utterly by the presence on earth of intellectually superior entities."19 But perhaps the most portentous implication in the evolving symbiosis of the human bio-computer and his electronic brainchild was voiced by Dr. Irving John Good of Trinity College, Oxford, in his prophetic statement: "The first ultra-intelligent machine is the last invention that man need make."20

THE AESTHETIC MACHINE

As the culmination of the Constructivist tradition, the digital computer opens vast new realms of possible aesthetic investigation. The poet Wallace Stevens has spoken of "the exquisite environment of face."

Conventional painting and photography have explored as much of that environment as is humanly possible. But, as with other hidden realities, is there not more to be found there? Do we not intuit something in the image of man that we never have been able to express visually? It is the belief of those who work in cybernetic art that the computer is the tool that someday will erase the division between what we feel and what we see. Aesthetic application of technology is the only means of achieving new consciousness to match our new environment. We certainly are not going to love computers that guide SAC missiles. We surely do not feel warmth toward machines that analyze marketing trends. But perhaps we can learn to understand the beauty of a machine that produces the kind of visions we see in expanded cinema.

It is quite clear in what direction man's symbiotic relation to the computer is headed: if the first computer was the abacus, the ultimate computer will be the sublime aesthetic device: a parapsychological instrument for the direct projection of thoughts and emotions. A. M. Noll, a pioneer in three-dimensional computer films at Bell Telephone Laboratories, has some interesting thoughts on the subject: "...the artist's emotional state might conceivably be determined by computer processing of physical and electrical signals from the artist (for example, pulse rate and electrical activity of the brain). Then, by changing the artist's environment through such external stimuli as sound, color and visual patterns, the computer would seek to optimize the aesthetic effect of all these stimuli upon the artist according to some specified criterion... the emotional reaction of the artist would continually change, and the computer would react accordingly either to stabilize the artist's emotional state or to steer it through some pre-programmed course. One is strongly tempted to describe these ideas as a consciousness-expanding experience in association with a psychedelic computer..."

Successive stereo pairs from a film by A. Michael Noll of Bell Telephone Laboratories, demonstrating the rotation, on four mutually perpendicular axes, of a four-dimensional hypercube projected onto dual two-dimensional picture planes in simulated three-dimensional space. The viewer wears special polarized glasses such as those common in 3-D movies of the early 1950's. The film was an attempt to communicate an intuitive understanding of four-dimensional objects, which in physics are called hyperobjects. A computer can easily construct, in mathematical terms, a fourth spatial dimension perpendicular to our three spatial dimensions. Only a fourth digit is required for the machine to locate a point in four-dimensional space.
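The claim that "only a fourth digit is required" can be shown directly: a hypercube is just sixteen points with four coordinates each, a rotation acts in a plane spanned by two of the four axes, and two successive perspective projections (4D to 3D, then 3D to a left and a right picture plane) give a stereo pair. The sketch below is a generic reconstruction of that idea under our own assumptions (viewer distances, eye separation, rotation planes). It is not Noll's actual program, which the text does not describe.

```python
import itertools
import math

def hypercube_vertices():
    """The 16 vertices of a tesseract centred on the origin: every choice of -1 or +1 for x, y, z and w."""
    return [list(v) for v in itertools.product((-1.0, 1.0), repeat=4)]

def rotate(points, i, j, angle):
    """Rotate every point in the plane spanned by axes i and j; the other two coordinates stay fixed."""
    c, s = math.cos(angle), math.sin(angle)
    rotated = []
    for p in points:
        q = list(p)
        q[i] = c * p[i] - s * p[j]
        q[j] = s * p[i] + c * p[j]
        rotated.append(q)
    return rotated

def project_4d_to_3d(points, viewer_w=3.0):
    """Perspective projection along the fourth (w) axis: points nearer the viewer in w appear larger."""
    return [[coord / (viewer_w - p[3]) for coord in p[:3]] for p in points]

def stereo_pair(points3d, eye_separation=0.2, viewer_z=4.0):
    """Project 3D points onto two picture planes, one for each eye, offset horizontally."""
    views = []
    for eye_x in (-eye_separation / 2, eye_separation / 2):
        views.append([((x - eye_x) / (viewer_z - z), y / (viewer_z - z)) for x, y, z in points3d])
    return views  # [left_eye_view, right_eye_view]

tesseract = hypercube_vertices()
frames = []
for k in range(24):
    angle = 2 * math.pi * k / 24
    # Turn the figure in the x-w plane and, more slowly, in the y-z plane.
    turned = rotate(rotate(tesseract, 0, 3, angle), 1, 2, angle / 2)
    frames.append(stereo_pair(project_4d_to_3d(turned)))
```

Each entry of `frames` holds the left and right views of the sixteen vertices. Drawing the cube's edges between those points, frame by frame, would give a sequence of stereo pairs of the kind described in the caption.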

This chapter on computer films might be seen as an introduction to the first tentative, crude experiments with the medium. No matter how impressive, they are dwarfed by the knowledge of what computers someday will be able to do. The curious nature of the technological revolution is that, with each new step forward, so much new territory is exposed that we seem to be moving backwards. No one is more aware of current limitations than the artists themselves.

As he has done in other disciplines without a higher ordering principle, man so far has used the computer as a modified version of older, more traditional media. Thus we find it compared to the brush, chisel, or pencil and used to facilitate the efficiency of conventional methods of animating, sculpting, painting, and drawing. But the chisel, brush, and canvas are passive media whereas the computer is an active participant in the creative process. Robert Mallary, a computer scientist involved in computer sculpture, has delineated six levels of computer participation in the creative act. In the first stage the machine presents proposals and variants for the artist's consideration without any qualitative judgments, yet the man/machine symbiosis is synergetic. At the second stage, the computer becomes an indispensable component in the production of an art that would be impossible without it, such as constructing holographic interference patterns. In the third stage, the machine makes autonomous decisions on alternative possibilities that ultimately govern the outcome of the artwork. These decisions, however, are made within parameters defined in the program. At the fourth stage the computer makes decisions not anticipated by the artist because they have not been defined in the program. This ability does not yet exist for machines. At the fifth stage, in Mallary's words, the artist "is no longer needed" and "like a child, can only get in the way." He would still, however, be able to "pull out the plug," a capability he will not possess when and if the computer ever reaches the sixth stage of "pure disembodied energy."22

Returning to more immediate realities, A. M. Noll has explained the computer's active role in the creative process as it exists today:

"Most certainly the computer is an electronic device capable of performing only those operations that it has been explicitly instructed to perform. This usually leads to the portrayal of the computer as a powerful tool but one incapable of any true creativity. However, if 'creativity' is restricted to mean the production of the unconventional or the unpredicted, then the computer should instead be portrayed as a creative medium: an active and creative collaborator with the artist... because of the computer's great speed, freedom from error, and vast abilities for assessment and subsequent modification of programs, it appears to us to act unpredictably and to produce the unexpected. In this sense the computer actively takes over some of the artist's creative search. It suggests to him syntheses that he may or may not accept. It possesses at least some of the external attributes of creativity."23

Traditionally, artists have looked upon science as being more important to mankind than art, whereas scientists have believed the reverse. Thus in the confluence of art and science the art world is understandably delighted to find itself suddenly in the company of science. For the first time, the artist is in a position to deal directly with fundamental scientific concepts of the twentieth century. He can now enter the world of the scientist and examine those laws that describe a physical reality. However, there is a tendency to regard any computer-generated art as highly significant, even the most simplistic line drawing, which would be meaningless if rendered by hand. Conversely, the scientific community could not be more pleased with its new artistic image, interpreting it as an occasion to relax customary scientific disciplines and accept anything random as art. A solution to the dilemma lies somewhere between the polarities and surely will evolve through closer interaction of the two disciplines.

When that occurs we will find that a new kind of art has resulted from the interface. Just as a new language is evolving from the binary elements of computers rather than the subject-predicate relation of the Indo-European system, so will a new aesthetic discipline that bears little resemblance to previous notions of art and the creative process. Already the image of the artist has changed radically.

In the new conceptual art, it is the artist's idea and not his technical ability in manipulating media that is important. Though much emphasis currently is placed on collaboration between artists and technologists, the real trend is more toward one man who is both artistically and technologically conversant. The Whitney family, Stan VanDerBeek, Nam June Paik, and others discussed in this book are among the first of this new breed. A. M. Noll is one of them, and he has said: "A lot has been made of the desirability of collaborative efforts between artists and technologists. I, however, disagree with many of the assumptions upon which this desirability supposedly is founded. First of all, artists in general find it extremely difficult to verbalize the images and ideas they have in their minds. Hence the communication of the artist's ideas to the technologist is very poor indeed. What I do envision is a new breed of artist... a man who is extremely competent in both technology and the arts."

Thus Robert Mallary speaks of an evolving "science of art... because programming requires logic, precision and powers of analysis as well as a thorough knowledge of the subject matter and a clear idea of the goals of the program... technical developments in programming and hardware will proceed hand in glove with a steady increase in the theoretical knowledge of art, as distinct from the intuitive and pragmatic procedures which have characterized the creative process up to now."

CYBERNETIC CINEMA

Three types of computer output hardware can be used to produce movies: the mechanical analogue plotter, the "passive" microfilm plotter and the "active" cathode-ray tube (CRT) display console.

Though the analogue plotter is quite useful in industrial and scientific engineering, architectural design, systems analysis, and so forth, it is rather obsolete in the production of aesthetically-motivated computer films. It can be, and is, used to make animated films but is best suited for still drawings. Through what is known as digital-to-analogue conversion, coded signals from a computer drive an armlike servomechanism that literally draws pen or pencil lines on flatbed or drum carriages. The resulting flow charts, graphs, isometric renderings, or realist images are incrementally precise but are too expensive and time-consuming for nonscientific movie purposes. William Fetter of the Boeing Company in Seattle has used mechanical analogue plotting systems to make animated films for visualizing pilot and cockpit configurations in aircraft design. Professor Charles Csuri of Ohio State University has created "random wars" and other random and semi-random drawings using mechanical plotters for realist images.

However, practically all computer films are made with cathode-ray tube digital plotting output systems. The cathode-ray tube, like the oscilloscope, is a special kind of television tube. It is a vacuum tube in which a heated cathode emits a narrow beam of electrons that, regulated by a grid between the cathode and anode, are accelerated at high velocity toward a phosphor-coated screen, which fluoresces at the point where the electrons strike. The resulting luminescent glow is called a "trace-point." An electromagnetic field deflects the electron beam along predetermined patterns in response to electronic impulses that can be broadcast, cabled, or recorded on tape. This deflection capability follows vertical and horizontal increments expressed as xy plotting coordinates. Modern three-inch CRTs are capable of responding to a computer's "plot-point" and "draw-line" commands at a rate of 100,000 per second within a field of 16,000 possible xy coordinates; that is, approximately a million times faster and more accurate than a human draftsman. When interfaced with a digital computer, the CRT provides a visual display of electronic signal information generated by the computer program.

The passive microfilm plotter is the most commonly used output system for computer movies. It is a self-contained film-recording unit in which a movie camera automatically records images generated on the face of a three-inch CRT. The term "microfilm" is confusing to filmmakers not conversant with industrial or scientific language. It simply indicates conventional emulsion film in traditional 8mm., 16mm., or 35mm. formats, used in a device originally intended not for the production of motion pictures but for still pictures, for compact storage of large amounts of printed or pictorial information. Users of microfilm plotters have found, however, that their movie-producing capability is at least as valuable as their storage-and-retrieval capability. Most computer films are not aesthetically-motivated. They are made by scientists, engineers, and educators to facilitate visualization and rapid assimilation of complex analytic and abstract concepts.
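The "plot-point" and "draw-line" commands described above amount to lighting individual grid positions and lighting every grid position along a straight segment. The sketch below models that idea on a small grid. The command tuples `("plot", x, y)` and `("line", x0, y0, x1, y1)` are a hypothetical format of our own, not the instruction set of any real plotter. For the line command we use Bresenham's integer line algorithm, a standard technique chosen here for illustration.

```python
def draw_line(x0, y0, x1, y1):
    """Bresenham's line algorithm: the integer grid points lit by one straight 'draw-line' command."""
    points = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        points.append((x0, y0))
        if x0 == x1 and y0 == y1:
            break
        e2 = 2 * err
        if e2 >= dy:   # step horizontally
            err += dy
            x0 += sx
        if e2 <= dx:   # step vertically
            err += dx
            y0 += sy
    return points

def execute(commands, size=16):
    """Run hypothetical ('plot', x, y) and ('line', x0, y0, x1, y1) commands on a size x size grid of trace-points."""
    screen = [[0] * size for _ in range(size)]
    for name, *args in commands:
        if name == "plot":
            lit = [tuple(args)]
        elif name == "line":
            lit = draw_line(*args)
        else:
            raise ValueError(f"unknown command {name!r}")
        for x, y in lit:
            if 0 <= x < size and 0 <= y < size:  # ignore points outside the display field
                screen[y][x] = 1
    return screen

screen = execute([("plot", 1, 1), ("line", 0, 0, 4, 0), ("line", 0, 7, 7, 0)], size=8)
```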

In standard cinematography the shutter is an integral part of the camera's drive mechanism, mechanically interlocked with the advance-claws that pull successive frames down to be exposed. But cameras in microfilm plotters such as the Stromberg-Carlson 4020 or the CalComp 840 are specially designed so that the shutter mechanism is separate from the film pull-down. Both are operated automatically, along with the CRT display, under computer program control.
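Because shutter and pull-down are separate, the program can treat them as independent steps in a loop. The sketch below is a hypothetical control sequence, not the firmware of the Stromberg-Carlson or CalComp machines. For each frame, it opens the shutter, lets the CRT trace the complete frame once, closes the shutter, and only then advances the film. The `draw` callback is a stand-in for whatever actually drives the CRT.

```python
def record_film(frames, draw):
    """Hypothetical control sequence for a camera whose shutter is separate from its film pull-down.

    For each frame: open the shutter, have the CRT trace the whole frame once,
    close the shutter, and only then advance the film.
    """
    log = []
    for n, frame in enumerate(frames):
        log.append(f"frame {n}: open shutter")
        draw(frame)  # every trace-point of this frame lands on the same film frame
        log.append(f"frame {n}: drew {len(frame)} commands")
        log.append(f"frame {n}: close shutter")
        log.append(f"frame {n}: advance film")
    return log

drawn = []
log = record_film([[("line", 0, 0, 4, 0)], [("plot", 2, 2), ("plot", 3, 3)]], draw=drawn.append)
```

Since the film never moves while the shutter is open, a frame may take as long to draw as it needs. This decoupling is what makes slow, single-frame computer animation practical.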

Some computer films, particularly those of John Whitney, are made with active twenty-one-inch CRTs such as the IBM 2250 Display Console with its light pen, keyboard inputs, and functional keys, whose use will be described in more detail later on. This arrangement is not a self-contained filmmaking unit; rather, a specially modified camera is set up in front of the CRT under automatic synchronous control of a computer program. This system is called "active" as opposed to the "passive" nature of the microfilm plotter because the artist can feed commands to the computer through the CRT by selecting variables with the light pen and the function keyboard, thus "composing" the picture in time as sequences develop (during filming, however, the light pen is not used and the CRT becomes a passive display of the algorithm). Also, until recently the display console was the only technique that allowed the artist to see the display as it was being recorded; recent microfilm plotters, however, are equipped with viewing monitors.

Since most standard microfilm plotters were not originally intended for the production of motion pictures, they are deficient in at least two areas that can be avoided by using the active CRT. First, film registration in microfilm plotters does not meet quality standards of the motion-picture industry, since frame-to-frame steadiness is not a primary consideration in conventional microfilm usage. Second, most microfilm plotters are not equipped to accept standard thousand-foot core-wound rolls of 35mm. film, which of course is possible with magazines of standard, though control-modified, cameras used to photograph active CRTs. Recently, however, computer manufacturing firms such as Stromberg-Carlson have designed cameras and microfilm plotters that meet all qualifications of the motion-picture industry, as the use of computer graphics becomes increasingly popular in television commercials and large animation firms.

Passive CRT systems are nevertheless preferred over active consoles for various reasons. First, the input capabilities of the active scope are rarely used in computer animation. Second, passive CRTs come equipped with built-in film recorders. Third, when filming from an active CRT scope, a synchronization problem can arise, caused by the periodic "refreshing" of the image. This is similar to the "rolling" phenomenon that often occurs in the filming of a televised program. The problem is avoided in passive systems since each frame is drawn only once and the camera shutter remains open while the frame is drawn.

The terms "on-line," "off-line," and "real time" are used in describing computer output systems. Most digital plotting systems are designed to operate either on-line or off-line with the computer.

In an on-line system, plot commands are fed directly from the computer to the CRT. In an off-line system, plot commands are recorded on magnetic tape that can instruct the plotter at a later time. The term "real time" refers specifically to temporal relationships between the CRT, the computer, and the final film or the human operator's interaction with the computing system. For example, a real-time interaction between the artist and the computer is possible by drawing on the face of the CRT with the light pen. Similarly, if a movie projected at the standard 24 fps has recorded the CRT display exactly as it was drawn by the computer, this film is said to be a real-time representation of the display. A live-action shot is a real-time document of the photographed subject, whereas single-frame animation is not a real-time image, since more time was required in recording than in projecting.
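The on-line/off-line distinction is about when the plotter receives its commands, not what the commands are. In the sketch below, on-line mode hands each command straight to a plotter function. Off-line mode first serializes the commands into a byte string that stands in for magnetic tape, then replays them later. The opcode numbers and the 9-byte record layout (`RECORD`) are hypothetical choices of ours, made only for the illustration. Python's standard `struct` module does the packing.

```python
import struct

# Hypothetical opcode numbering and record layout, for illustration only.
OPCODES = {"plot": 1, "line": 2}
RECORD = struct.Struct("<B4H")  # 1-byte opcode + four unsigned 16-bit coordinates = 9 bytes

def run_online(commands, plotter):
    """On-line: each command goes straight from the computer to the plotter as it is produced."""
    for command in commands:
        plotter(command)

def write_tape(commands) -> bytes:
    """Off-line, step 1: record the commands (here into bytes standing in for magnetic tape)."""
    tape = bytearray()
    for name, *coords in commands:
        padded = list(coords) + [0] * (4 - len(coords))  # every record holds four coordinates
        tape += RECORD.pack(OPCODES[name], *padded)
    return bytes(tape)

def read_tape(tape: bytes):
    """Off-line, step 2: at a later time, replay the tape to recover the commands for the plotter."""
    names = {code: name for name, code in OPCODES.items()}
    commands = []
    for code, *coords in RECORD.iter_unpack(tape):
        name = names[code]
        commands.append((name, *coords[: 2 if name == "plot" else 4]))
    return commands

commands = [("plot", 10, 20), ("line", 0, 0, 100, 50)]
tape = write_tape(commands)   # could be stored and sent to the plotter hours later
received = []
run_online(commands, received.append)
```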

Very few computer films of significant complexity are recorded in real-time operation. Only one such film, Peter Kamnitzer's City-Scape, is discussed in this book. This is primarily because the hardware necessary to do real-time computer filmmaking is rare and prohibitively expensive, and because real-time photography is not of crucial importance in the production of aesthetically-motivated films.

In the case of John Whitney's work, for example, although the imagery is reconceived for movie projection at 24 fps, it is filmed at about 8 fps. Three to six seconds are usually required to produce one image, and a twenty-second sequence projected at 24 fps may require thirty minutes of computer time to generate.
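The figures in this paragraph can be checked with simple arithmetic. A twenty-second sequence at 24 fps is 480 frames, and at three to six seconds per image that is 24 to 48 minutes of computer time, so the quoted thirty minutes sits inside that range. The helper names below are our own.

```python
def generation_minutes(film_seconds: float, seconds_per_image: float, fps: int = 24) -> float:
    """Computer time needed for a sequence: frames to be projected x time to produce each frame."""
    frames = film_seconds * fps
    return frames * seconds_per_image / 60

def slowdown(film_seconds: float, seconds_per_image: float, fps: int = 24) -> float:
    """How many times longer recording takes than projecting; 1.0 would be real time."""
    return generation_minutes(film_seconds, seconds_per_image, fps) * 60 / film_seconds

low = generation_minutes(20, 3)   # 480 frames at 3 s each
high = generation_minutes(20, 6)  # 480 frames at 6 s each
print(low, high)                  # 24.0 48.0
print(slowdown(20, 3), slowdown(20, 6))
```

The slowdown factor (72 to 144 times longer than projection) is why such films are not real-time representations in the sense defined above.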

Most CRT displays are black-and-white. Although the Sandia Corporation and the Lawrence Radiation Laboratory have achieved dramatic results with full-color CRTs, the color of most computer films is added in optical printing of original black-and-white footage, or else colored filters can be superposed over the face of the CRT during photography. Full color and partial color displays are available.

As in the case of City-Scape, however, a great deal of color quality is lost in photographing the CRT screen. Movies of color CRT displays invariably are washed-out, pale, and lack definition. Since black-and-white film stocks yield much higher definition than color film stocks, most computer films are recorded in black-and-white with color added later through optical printing.

A similar problem exists in computer-generated realistic imagery in motion. It will be noted that most films discussed here are nonfigurative, non-representational, i.e., concrete. Those films which do contain representational images (City-Scape, Hummingbird) are rather crude and cartoon-like in comparison with conventional animation techniques. Although computer films open a new world of language in concrete motion graphics, the computer's potential for manipulation of the realistic image is of far greater relevance for both artist and scientist. Until recently the bit capacity of computers far outstripped the potentials of existing visual subsystems, which did not have the television capability of establishing a continuous scan on the screen so that each point could be controlled in terms of shading and color. Now, however, such capabilities do exist and the tables are turned; the bit capacity necessary to generate television-quality motion images with tonal or chromatic scaling is enormously beyond present computer capacity.
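A rough count shows why television-quality imagery strains bit capacity. Every point on a continuous scan needs its own tonal or chromatic value, so the bits per frame are width × height × bits per point. The raster size (640 × 480), the 8 and 24 bits per point, and the 30 frames per second below are our own assumptions, chosen only as a plausible stand-in for "television quality." The four-million-bit figure is the upper storage capacity quoted earlier in this chapter.

```python
# Assumed raster for "television-quality" pictures (our assumption, not the author's).
WIDTH, HEIGHT = 640, 480   # points per line x visible lines
FRAMES_PER_SECOND = 30

def bits_per_frame(bits_per_point: int, width: int = WIDTH, height: int = HEIGHT) -> int:
    """Every point on the scan needs its own tonal or chromatic value."""
    return width * height * bits_per_point

grey = bits_per_frame(8)        # 256 grey levels per point
colour = bits_per_frame(24)     # 8 bits each for three colour components
LARGEST_STORE_BITS = 4_000_000  # upper storage figure quoted earlier in this chapter

print(f"{grey:,} bits per grey frame, {colour:,} bits per colour frame")
print(f"{colour * FRAMES_PER_SECOND:,} bits per second of colour motion")
print(colour > LARGEST_STORE_BITS)  # True: one colour frame alone exceeds that store
```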

Existing methods of producing realistic imagery still require some form of realistic input. The computer does not "understand" a command to make this portion of the picture dark gray or to give that line more "character." But it does understand algorithms that describe the same effects. For example, L. D. Harmon and Kenneth Knowlton at Bell Telephone have produced realistic pictures by scanning photographs with equipment similar to television cameras.

The resulting signals are converted into binary numbers representing brightness levels at each point. These bits are transferred to magnetic tape, providing a digitized version of the photograph for computer processing. Brightness is quantized into eight levels of density represented by one of eight kinds of dots or symbols. They appear on the CRT in the form of a mosaic representation of the original photograph. The process is both costly and time consuming, with far less "realistic" results than conventional procedures.
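The quantizing step described here, eight brightness levels each shown as one of eight kinds of symbol, can be sketched as follows. We assume scanned brightness arrives as integers from 0 (black) to 255 (white), and the eight characters in `SYMBOLS` are hypothetical stand-ins for Harmon and Knowlton's actual dot patterns, which the text does not specify. Eight levels need exactly three bits per point.

```python
# Eight hypothetical symbols standing in for the eight kinds of dots, from densest (dark) to emptiest (light).
SYMBOLS = "#*+=-:. "

def quantize(brightness: int, levels: int = 8) -> int:
    """Map a 0-255 brightness sample to one of `levels` equal bands; 0 is darkest."""
    return min(brightness * levels // 256, levels - 1)

def mosaic(image):
    """Replace every brightness sample of a scanned picture with the symbol for its quantized level."""
    return ["".join(SYMBOLS[quantize(v)] for v in row) for row in image]

# A tiny hypothetical "scan": a left-to-right gradient, repeated on three lines.
scan = [[x * 255 // 15 for x in range(16)] for _ in range(3)]
for line in mosaic(scan):
    print(line)
```

Printed at a distance, the symbols blend back into tones, which is the mosaic effect the paragraph describes. Much of the original photograph's detail is lost, because each point keeps only three bits.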

The Computer Image Company of Denver, Colorado, has devised two unique methods of producing cartoon-like representational computer graphics in real-time, on-line operation. Using special hybrid systems with the advantages of both digital and analogue computers, they generate images through optical scanning or acoustical and anthropometric controls. In the scanning process, called Scanimate, a television camera scans black-and-white or color transparencies; this signal is input to the Scanimate computer, where it is segmented into as many as five different parts, each capable of independent movement in synchronization with any audio track, either music or commentary. The output is recorded directly onto film or videotape as an integral function of the Scanimate process.

The second computer image process, Animac, does not involve optical scanning. It generates its own images in conjunction with acoustical or anthropometric analogue systems. In the first method the artist speaks into a microphone whose electrical signals are converted into a form that modulates the cartoon image on the CRT. The acoustical input animates the cartoon mouth while other facial characteristics are controlled simultaneously by another operator. In the second method an anthropometric harness is attached to a person (a dancer, for example), with sensors at each of the skeletal joints. If the person moves his arm, the image moves its arm; when the person dances, the cartoon character dances in real-time synchronization, with six degrees of freedom in simulated three-dimensional space. It should be stressed that these cartoon images are only "representational" and not "realistic." The systems were designed specifically to reduce the cost of commercial filmmaking and not to explore serious aesthetic potentials. It's obvious, however, that such techniques could be applied to artistic investigation and to nonobjective graphic compositions.
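Animac's circuitry isn't described here, so the following is only a sketch of the general idea, assuming the simplest possible mapping: the loudness of the speaker's voice, measured over short blocks of samples, sets how wide the cartoon mouth opens. Animac was a hybrid analogue system; this digital version, and the names `rms`, `mouth_openings`, `frame_size`, and `gain`, are hypothetical.

```python
import math


def rms(frame: list[float]) -> float:
    """Root-mean-square amplitude of one block of samples (assumed -1.0..1.0)."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def mouth_openings(samples: list[float], frame_size: int = 4,
                   gain: float = 2.0) -> list[float]:
    """One mouth-opening value per audio block: 0.0 closed, 1.0 wide open.

    frame_size and gain are hypothetical tuning parameters.
    """
    openings = []
    for start in range(0, len(samples), frame_size):
        level = rms(samples[start:start + frame_size]) * gain
        openings.append(min(level, 1.0))  # clamp so loud sounds don't overshoot
    return openings
```

Silence keeps the mouth shut, moderate speech half-opens it, and loud passages are clamped at fully open; a real system would also smooth the values between frames.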

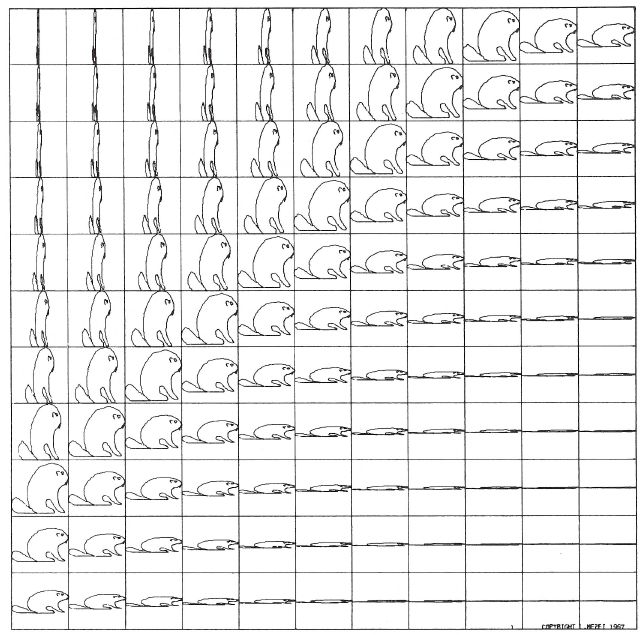

Professor Charles Csuri's computer film, Hummingbird, was produced by digital scanning of an original hand drawing of the bird. The computer translated the drawing into x-y plotting coordinates and processed variations on the drawing, assembling, disassembling, and distorting its perspectives. Thus the images were not computer-generated so much as computer-manipulated. There's no actual animation in the sense of separately moving parts. Instead a static image of the bird is seen in various perspectives and at times is distorted by reversals of the polar coordinates. Software requirements were minimal, and the film has little value as art other than as a demonstration of one possibility in computer graphics.
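The two kinds of transformation described for Hummingbird can be illustrated on a list of x-y points. This is a hypothetical sketch, not Csuri's software: `rotate` changes only the orientation of the drawing, while `reverse_radius` is one possible reading of a "reversal of the polar coordinates," swapping points near the origin with points near the rim (r becomes r_max minus r), which distorts the drawing rather than merely reorienting it.

```python
import math

Point = tuple[float, float]


def to_polar(p: Point) -> tuple[float, float]:
    """Convert an x-y point to (radius, angle in radians)."""
    x, y = p
    return math.hypot(x, y), math.atan2(y, x)


def from_polar(r: float, theta: float) -> Point:
    """Convert (radius, angle) back to an x-y point."""
    return r * math.cos(theta), r * math.sin(theta)


def rotate(points: list[Point], angle: float) -> list[Point]:
    """Rigid rotation about the origin: same drawing, new orientation."""
    return [from_polar(r, t + angle) for r, t in map(to_polar, points)]


def reverse_radius(points: list[Point]) -> list[Point]:
    """Hypothetical 'polar reversal': r -> r_max - r, keeping each angle.

    Points at the centre move to the rim and vice versa, turning the
    drawing inside out.
    """
    polar = [to_polar(p) for p in points]
    r_max = max(r for r, _ in polar)
    return [from_polar(r_max - r, t) for r, t in polar]
```

Applying `rotate` with a series of angles gives the "various perspectives" of a static image; `reverse_radius` produces the kind of gross distortion that no change of viewpoint could.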

Limitations of computer-generated realistic imagery exist both in the central processing hardware and in the visual output subsystems. Advancements are being made in output subsystems that go beyond the present bit capacity of most computers. Chief among these is the "plasma crystal" panel, which makes possible billboard-size or wall-size TV receivers as well as pocket-size TV sets that could be viewed in bright sunlight. The Japanese firms of Mitsubishi and Matsushita (Panasonic) seem to be leaders in the field, each having produced workable models. Meanwhile virtually every major producer of video technology has developed its own version. One of the pioneers of this process in the United States was Dr. George Heilmeier of RCA's David Sarnoff Research Center in Princeton, New Jersey. He describes plasma crystals (sometimes called liquid crystals) as organic compounds whose appearance and mechanical properties are those of a liquid, but whose molecules tend to form into large orderly arrays akin to the crystals of mica, quartz, or diamonds. Unlike luminescent or fluorescing substances, plasma crystals do not emit their own light: they're read by reflected light, growing brighter as their surroundings grow brighter.

It was discovered that certain liquid crystals can be made opalescent, and hence reflecting, by the application of electric current. Therefore, in manufacturing such display systems, a sandwich is formed of two clear glass plates separated by a thin layer of clear liquid crystal material only one-thousandth of an inch thick. A reflective, mirror-like conductive coating is deposited on the inside face of one plate, in contact with the liquid. On the inside of the other is deposited a transparent, electrically conductive coating of tin oxide. When an electric charge from a battery or wall outlet is applied between the two coatings, the liquid crystal molecules are disrupted and the sandwich takes on the appearance of frosted glass. The frostiness disappears, however, as soon as the charge is removed.

In order to display stationary patterns such as letters, symbols, or still images, the coatings are shaped in accordance with the desired pattern. To display motion the conductive coatings are laid down in the form of a fine mosaic whose individual elements can be charged independently, in accordance with a scanning signal such as is presently used for facsimile, television, and other electronic displays.
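The addressing of such a mosaic by a scanning signal can be sketched as follows, under simplifying assumptions: the signal is a sequence of samples in television raster order (left to right, top to bottom, with no interlace), and each element is simply charged or uncharged, matching the frosted-or-clear behavior of the liquid crystal sandwich. The function name `scan_to_mosaic` is hypothetical.

```python
def scan_to_mosaic(signal: list[int], width: int, height: int) -> list[list[bool]]:
    """Distribute a serial scanning signal over a width x height mosaic.

    Sample k addresses row k // width, column k % width, i.e. left to right
    and top to bottom, as a (non-interlaced) television raster does.
    A nonzero sample charges that element (frosted); zero leaves it clear.
    """
    if len(signal) != width * height:
        raise ValueError("signal length must equal width * height")
    mosaic = [[False] * width for _ in range(height)]
    for k, value in enumerate(signal):
        row, col = divmod(k, width)
        mosaic[row][col] = value != 0
    return mosaic
```

Because every element is reached by its position in the serial signal, the same panel can show letters, still images, or motion simply by changing what is sent down the line.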

To make the images visible in a dark room or outdoors at night, both coatings are made transparent and a light source is installed at the edge of the screen. In addition, it is possible to reflect a strong light from the liquid crystal display to project its images, enlarged many times, onto a wall or screen. The implications of the plasma crystal display system are vast. Since it is, in essence, a digital system composed of hundreds of thousands of discrete picture elements (pixels), it obviously is suitable as a computer graphics subsystem virtually without limitation, if only sufficient computing capabilities existed. The bit requirements necessary for computer generation of real-time realistic images in motion are as yet far beyond the present state of the art.

This is demonstrated in a sophisticated video-computer system developed by the Jet Propulsion Laboratory in Pasadena, California, to translate television pictures from Mars in the various Mariner projects. This fantastic system transforms the real-time TV signal into digital picture elements that are stored on special data discs. The picture itself is not stored, only its digital translation. The JPL video system has 480 lines of resolution, each line composed of 512 individual points. One single image, or "cycle," is thus defined by 245,760 points. In black-and-white, each of these individually selectable points can be set to display any of 64 intensities on the gray scale between total black and total white.

Since each point can take any of its 64 values independently of every other point, the possible variations for one single image amount to 64 raised to the power of 245,760 (64 multiplied by itself 245,760 times). For color displays, the total image can be thought of as three independent images (one for each color constituent: red, green, and blue) or as a triplet specification for each of the 480 times 512 points. With each constituent capable of 64 different intensity levels, a theoretical total of 262,144 (64 × 64 × 64) different color shadings is possible for any given point in the image. (The average human eye can perceive only 100 to 200 different color shadings.) These capabilities are possible only for single motionless images. Six bits of information (2 to the sixth power being 64) are required to produce each of the 245,760 points that constitute one image or cycle, and several seconds are necessary to complete the cycle. Yet JPL scientists estimate that a computing capability of at least two megacycles (two million cycles) per second would be required to generate motion with the same image-transforming capabilities.
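The JPL figures can be checked with a little arithmetic. This sketch uses only the numbers given in the text (480 lines, 512 points per line, 64 levels per point, three color constituents); the variable names are illustrative. The number of distinct black-and-white images, 64 to the power 245,760, is far too large to print usefully, so the code reports how many decimal digits it has instead.

```python
import math

LINES, POINTS_PER_LINE = 480, 512  # JPL raster as described in the text
LEVELS = 64                        # intensities per point (per color constituent)

points_per_image = LINES * POINTS_PER_LINE            # 245,760 points
bits_per_point = math.log2(LEVELS)                    # 6.0 bits encode 64 levels
bits_per_gray_image = int(bits_per_point) * points_per_image  # 1,474,560 bits
colors_per_point = LEVELS ** 3                        # 262,144 shadings (R, G, B)
bits_per_color_image = 3 * bits_per_gray_image        # 4,423,680 bits

# Distinct black-and-white images: 64 ** 245,760 (every point varies
# independently). Count its decimal digits via logarithms rather than
# building the enormous integer.
digits = math.floor(points_per_image * math.log10(LEVELS)) + 1

print(points_per_image, bits_per_gray_image, colors_per_point, digits)
```

Roughly 1.5 million bits describe a single black-and-white frame; generating many such frames per second, rather than one every few seconds, is the gap the JPL estimate points to.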

It is quite clear that human communication is trending toward these possibilities. If the visual subsystems exist today, it's folly to assume that the computing hardware won't exist tomorrow. The notion of "reality" will be utterly and finally obscured when we reach that point. There'll be no need for "movies" to be made on location, since any conceivable scene will be generated in totally convincing reality within the information processing system. By that time, of course, movies as we know them will not exist. We're entering a mythic age of electronic realities that exist only on a metaphysical plane. Meanwhile some significant work is being done in the development of new language through computer-generated, nonrepresentational graphics in motion. I've selected several of the most prominent artists in the field and certain films that, though not aesthetically motivated, reveal possibilities for artistic exploration. We'll begin with the Whitney family: John, Sr., and his brother James inaugurated a tradition; the sons John, Jr., Michael, and Mark are the first second-generation computer filmmakers in history.

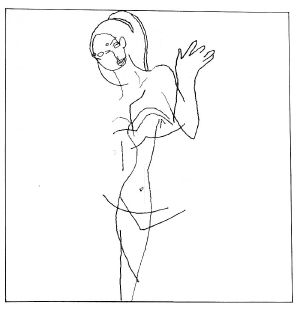

"Hunger" or "La Faim" is an 11 min 2d-computer animation by Peter Foldes, composed of hand drawn images and digital metamorphosis, nominated for the acadamy award in 1974 (didn't win).

"Hunger" or "La Faim" is an 11 min 2d-computer animation by Peter Foldes, composed of hand drawn images and digital metamorphosis, nominated for the acadamy award in 1974 (didn't win).